
A Python-based RPA and middleware platform tailored for the chemical industry. It automates data ingestion, cleaning, and processing across millions of diverse supplier records, ensuring real-time visibility, data integrity, and scalable operations.

Chemical Business sources millions of product listings from both direct supplier catalogs and scraped supplier websites. To fully automate ingestion and normalization of this vast catalog, we built a distributed, Python-powered RPA and middleware platform that dynamically dispatches scripts across multiple servers and client nodes.

Chemical Business is a leading provider of IT Automation and Data Management solutions for the chemical industry. They specialize in streamlining complex workflows—ranging from data acquisition and validation to advanced reporting—so that their clients can focus on innovation and compliance rather than manual data handling.

IT Automation/Data Management

RPA, Middleware Development, Automation

Comprehensive solutions designed to enhance user experience and drive business growth.

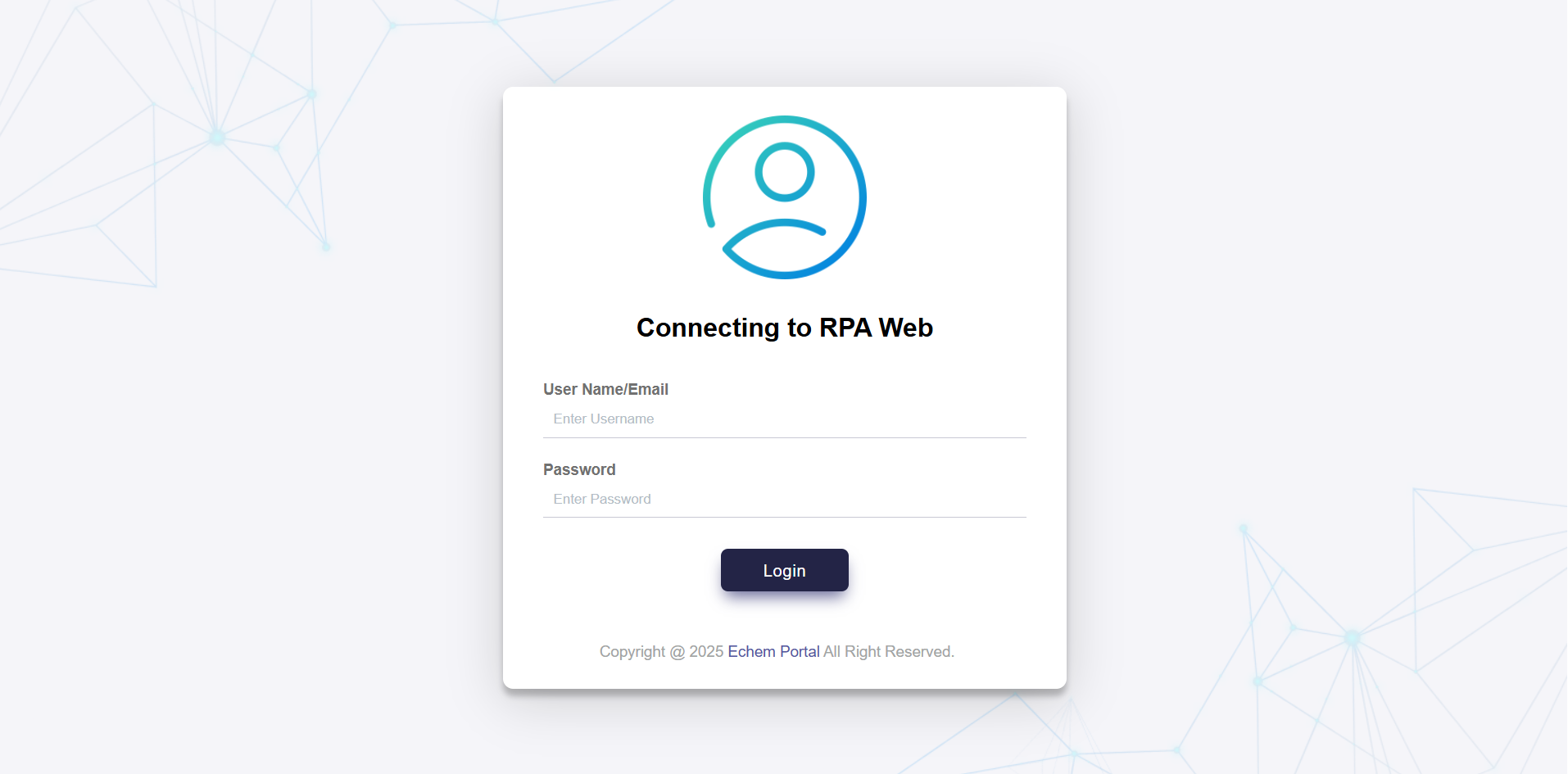

Overview of the system.

Create, manage, and monitor Robotic Process Automation workflows integrated with internal systems or databases.

Analyze and validate database structures, entries, and integrity checks across environments.

Specialized chemical indexing tool for SMILES/InChI/CAS-based mapping, supporting chemical data automation.

Global settings for RPA bots, server and code management, configuration, user management, and more.

File Management, Site-based Exception Handling.

Validate and manage bulk email lists used in automated communication pipelines.

Bulk import tool for loading “Bingo” data into the system.

Auto-generate and manage images dynamically based on application inputs or workflow steps.

Role-based access control for managing users, permissions, and module-level security enforcement.

We identified key pain points and developed targeted solutions to transform Chemical Business's data operations.

Handling millions of product records from a mix of direct supplier catalogs, website scrapes, and third‑party feeds—each with its own format, schema, and refresh cadence—made scheduling, ingestion, and normalization highly complex.

Supplier catalog exports and public websites evolve regularly, causing occasional schema drift or layout changes that can break scraping scripts or import routines.

Raw feeds often contained duplicates, missing or malformed identifiers (SMILES/InChI/CAS), inconsistent taxonomy, and invalid field values, undermining data integrity and downstream reporting.

Without centralized monitoring, it was difficult to detect pipeline failures (e.g. scraper timeouts, import errors, or image‑generation faults) quickly and to trace them back to their root cause.

Newly imported products often lacked photography; generating meaningful placeholder or metadata‑driven images automatically, without manual intervention, added another layer of complexity.

Built a React.js console that schedules and monitors all pipeline stages—scraping, cleaning, indexing, importing, validation, and image generation—with real‑time job metrics, logs, alerts, and role‑based access control.
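A minimal sketch of how the console's per-stage metrics and alerts might be computed, assuming each pipeline run is recorded with its stage, outcome, and duration. The `JobRun` record, the function names, and the 5% failure-rate threshold are hypothetical; the case study does not describe the console's data model, and the React.js front end is not shown.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


# Hypothetical job record; the real console's data model is not described.
@dataclass(frozen=True)
class JobRun:
    stage: str        # e.g. "scrape", "clean", "import"
    succeeded: bool
    duration_s: float


def stage_metrics(runs):
    """Aggregate job runs into per-stage run count, failure rate, and mean duration."""
    totals = Counter()
    failures = Counter()
    durations = defaultdict(float)
    for run in runs:
        totals[run.stage] += 1
        durations[run.stage] += run.duration_s
        if not run.succeeded:
            failures[run.stage] += 1
    return {
        stage: {
            "runs": totals[stage],
            "failure_rate": failures[stage] / totals[stage],
            "mean_duration_s": durations[stage] / totals[stage],
        }
        for stage in totals
    }


def stages_to_alert(metrics, max_failure_rate=0.05):
    """Return stages whose failure rate exceeds the (assumed) alert threshold."""
    return sorted(s for s, m in metrics.items() if m["failure_rate"] > max_failure_rate)
```

Keeping aggregation separate from alerting lets the same metrics feed dashboards while thresholds are tuned per stage.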

Developed a library of Python “source adapters” for each catalog type or web target. Each adapter encapsulates extraction logic (API calls, CSV/XML parsing, or headless‑browser scraping) and can be patched quickly when source formats change.
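A minimal sketch of the adapter idea, assuming a common base class with a single `extract()` method. `SourceAdapter`, `CsvCatalogAdapter`, `SchemaDriftError`, and the expected column names are hypothetical. The header check shows one way an adapter can fail fast when a supplier's export drifts, so it can be patched rather than silently importing broken rows.

```python
import csv
import io
from abc import ABC, abstractmethod
from typing import Iterator


class SchemaDriftError(Exception):
    """Raised when a source no longer matches the columns its adapter expects."""


class SourceAdapter(ABC):
    """Hypothetical base class: one subclass per catalog type or web target."""

    @abstractmethod
    def extract(self) -> Iterator[dict]:
        """Yield raw records as dicts, one per product listing."""


class CsvCatalogAdapter(SourceAdapter):
    """Adapter for a supplier's CSV export, with a header check for schema drift."""

    EXPECTED_COLUMNS = {"sku", "name", "cas"}  # assumed column names

    def __init__(self, text: str, supplier: str):
        self.text = text
        self.supplier = supplier

    def extract(self) -> Iterator[dict]:
        reader = csv.DictReader(io.StringIO(self.text))
        missing = self.EXPECTED_COLUMNS - set(reader.fieldnames or [])
        if missing:
            # Fail loudly so the adapter gets patched instead of importing bad rows.
            raise SchemaDriftError(f"{self.supplier}: missing columns {sorted(missing)}")
        for row in reader:
            # Tag each record with its source so later stages can trace it back.
            yield {"supplier": self.supplier, **row}
```

API and headless-browser adapters would subclass the same base class, so downstream stages consume every source through one interface.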

Implemented staged, reusable Python modules to deduplicate records, normalize data types, enforce taxonomy mappings, and apply business‑rule validations—ensuring only clean, standardized data is bulk‑loaded into SQL Server/MySQL.
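A minimal sketch of three of those stages (normalization, identifier validation, and deduplication), assuming records arrive as dicts with `supplier`, `sku`, `name`, and `cas` fields. The field names, the `(supplier, sku)` deduplication key, and the function names are hypothetical; taxonomy mapping and the bulk load are omitted. The CAS check uses the standard CAS Registry Number check digit: each digit before the final one is weighted 1, 2, 3, … from the right, and the weighted sum modulo 10 must equal the check digit.

```python
import re

# CAS Registry Number: 2-7 digits, 2 digits, 1 check digit, separated by hyphens.
CAS_PATTERN = re.compile(r"^(\d{2,7})-(\d{2})-(\d)$")


def is_valid_cas(cas: str) -> bool:
    """Check CAS number format and check digit."""
    match = CAS_PATTERN.match(cas.strip())
    if not match:
        return False
    body = match.group(1) + match.group(2)
    check = int(match.group(3))
    # Weight digits 1, 2, 3, ... starting from the rightmost digit of the body.
    total = sum(int(d) * w for w, d in enumerate(reversed(body), start=1))
    return total % 10 == check


def normalize(record: dict) -> dict:
    """Trim whitespace and standardize casing on key fields (hypothetical rules)."""
    return {
        **record,
        "name": record.get("name", "").strip(),
        "cas": record.get("cas", "").strip(),
        "sku": record.get("sku", "").strip().upper(),
    }


def clean(records):
    """Normalize, validate CAS numbers, then deduplicate on (supplier, sku)."""
    seen = set()
    valid, rejected = [], []
    for raw in records:
        rec = normalize(raw)
        if not is_valid_cas(rec["cas"]):
            rejected.append(rec)  # route to review instead of loading
            continue
        key = (rec.get("supplier"), rec["sku"])
        if key in seen:
            continue  # drop duplicate listing
        seen.add(key)
        valid.append(rec)
    return valid, rejected
```

For example, water's CAS number 7732-18-5 passes: 8·1 + 1·2 + 2·3 + 3·4 + 7·5 + 7·6 = 105, and 105 mod 10 = 5. Normalizing before deduplicating matters: " a1 " and "A1" only collapse into one record after trimming and uppercasing.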

Created a database‑profiling tool that scans for anomalies (orphan records, mismatched identifiers, schema drift) and either flags issues for review or applies safe, rule‑based corrections automatically.
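A minimal sketch of two profiling checks, orphan detection and schema drift, using Python's built-in `sqlite3` so it runs anywhere. SQLite stands in for SQL Server/MySQL here; on those engines the live column list would come from `INFORMATION_SCHEMA.COLUMNS` instead of `PRAGMA table_info`. The table names, column names, and helper functions are hypothetical, and these checks only flag issues rather than correcting them.

```python
import sqlite3


def find_orphans(conn: sqlite3.Connection, child: str, fk: str, parent: str, pk: str = "id"):
    """Return ids of child rows whose non-null foreign key has no matching parent row."""
    # Identifiers are interpolated, so they must come from trusted config, never user input.
    query = (
        f"SELECT c.id FROM {child} AS c "
        f"LEFT JOIN {parent} AS p ON c.{fk} = p.{pk} "
        f"WHERE c.{fk} IS NOT NULL AND p.{pk} IS NULL ORDER BY c.id"
    )
    return [row[0] for row in conn.execute(query)]


def schema_drift(conn: sqlite3.Connection, table: str, expected_columns: set):
    """Compare a table's live columns with an expected set; return (missing, unexpected)."""
    # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk) per column.
    live = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    return sorted(expected_columns - live), sorted(live - expected_columns)


def demo_connection() -> sqlite3.Connection:
    """Build a tiny in-memory database with one orphaned product (id 11)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE suppliers (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE products (id INTEGER PRIMARY KEY, supplier_id INTEGER, cas TEXT);
        INSERT INTO suppliers VALUES (1, 'Acme');
        INSERT INTO products VALUES (10, 1, '7732-18-5'), (11, 2, '64-17-5'), (12, NULL, '50-00-0');
        """
    )
    return conn
```

On the demo data, `find_orphans(conn, "products", "supplier_id", "suppliers")` flags product 11, whose supplier does not exist, while product 12 (no supplier recorded) is left to a separate completeness rule.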

Deployed a scalable Python‑worker framework that dynamically assigns jobs across servers and client nodes, with auto‑retries on failure, health‑checks, and versioned script deployments to minimize downtime.
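A minimal sketch of two pieces of such a framework, assuming jobs are plain callables and worker nodes are identified by name: retries with exponential backoff and jitter, and round-robin assignment to nodes that pass a health check. `run_with_retries`, `dispatch`, and the `is_healthy` callback are hypothetical; a production dispatcher would also need a durable queue and versioned script deployment, which are not shown.

```python
import random
import time
from itertools import cycle


def run_with_retries(job, max_attempts=3, base_delay_s=0.5, sleep=time.sleep):
    """Call job(); on exception, retry with exponential backoff plus random jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return job()
        except Exception:
            if attempt == max_attempts:
                raise  # give up and surface the failure to monitoring
            delay = base_delay_s * 2 ** (attempt - 1)  # 0.5 s, 1 s, 2 s, ...
            sleep(delay + random.uniform(0, delay / 2))


def dispatch(jobs, nodes, is_healthy):
    """Assign job names round-robin to the nodes that pass a health check."""
    healthy = [n for n in nodes if is_healthy(n)]
    if not healthy:
        raise RuntimeError("no healthy worker nodes")
    return {job: node for job, node in zip(jobs, cycle(healthy))}
```

The injectable `sleep` parameter keeps the retry logic testable without real delays, and jitter spreads out retries so failed scrapers do not all hit a supplier site at the same moment.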

Built an image‑generation microservice that composites product metadata onto branded templates or placeholder graphics, triggered automatically for new imports and delivering assets to a CDN.
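A minimal sketch of metadata-driven placeholder generation, assuming an SVG template filled with the product name and CAS number. The template, colors, and `placeholder_svg` function are hypothetical, and SVG stands in for whatever image format the microservice actually produces; the CDN upload is omitted. Metadata is XML-escaped so names containing `&` or `<` still yield a valid document.

```python
from xml.sax.saxutils import escape

# Hypothetical branded template; real templates and fonts are not described.
SVG_TEMPLATE = """<svg xmlns="http://www.w3.org/2000/svg" width="{w}" height="{h}">
  <rect width="100%" height="100%" fill="{bg}"/>
  <text x="50%" y="45%" text-anchor="middle" font-family="sans-serif" font-size="20">{name}</text>
  <text x="50%" y="60%" text-anchor="middle" font-family="sans-serif" font-size="14">CAS {cas}</text>
</svg>"""


def placeholder_svg(name: str, cas: str, width: int = 400, height: int = 300,
                    background: str = "#eef2f5") -> str:
    """Render a placeholder product image from metadata."""
    return SVG_TEMPLATE.format(
        w=width, h=height, bg=background,
        name=escape(name),  # escape &, <, > in product names
        cas=escape(cas),
    )
```

Because the output is plain text, it can be generated on import and cached alongside the product record before being pushed to a CDN.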

Visual highlights showcasing the transformation and key features of the new platform.


Automation and middleware brought scale, agility, and visibility to data operations in the chemical industry.

Reduced manual catalog updates from days to minutes—handling millions of records without human intervention.

Automated anomaly detection and correction slashed data-quality incidents by 90%, ensuring that downstream analytics and reporting are rock-solid.

Python scripts can be hot-patched and capacity scaled up or down on demand, so new supplier integrations roll out in hours, not weeks.

The central console gives stakeholders a single pane of glass for process health, resource utilization, error trends, and KPIs, enabling proactive issue resolution.