
Discover how the Model Context Protocol (MCP) powers intelligent, secure, and scalable web agents — transforming customer experiences and developer workflows through standardized AI tool integration.

MCP acts like USB-C for AI: a universal connector for data and tools.

Scoped permissions, redaction, and observability ensure enterprise-grade safety.

Multi-agent context sharing improves reasoning and collaboration.


If your website or app still treats AI as a bolt-on chatbot, you're leaving value on the table. The next wave of AI chatbots for websites goes beyond FAQs.

Modern assistants schedule meetings, search product catalogs, generate quotes, triage tickets, and coordinate with internal systems. To do that reliably, an agent needs access to context across many services.

That's exactly where the Model Context Protocol (MCP) comes in. Think of MCP as USB-C for AI tools and data.

It standardizes how an AI agent connects to files, databases, SaaS apps, and internal APIs so the agent can understand your world and act within it. This article explains what MCP is, how it changes multi-agent context sharing, where it's used most, how to integrate it in React and .NET apps, the architecture you'll need, real business impact, and what to watch out for when you adopt MCP.

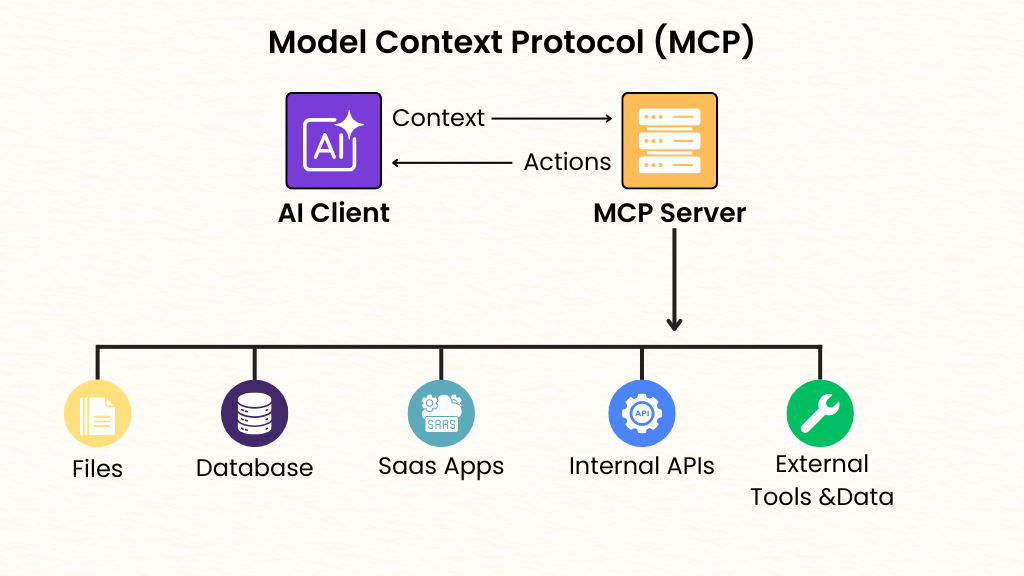

In plain English, the Model Context Protocol is an open standard that lets AI systems talk to external tools and data sources in a consistent, secure way.

Instead of writing one-off connectors for every source (Google Drive, Slack, your CMS, your ticketing system), you connect your agent to MCP servers.

Each server exposes resources (readable data) and tools (actions, such as createTicket or searchOrders) over a standardized interface.

Your AI client, regardless of model vendor, can discover capabilities, request context, and trigger actions through that one interface.
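To make that one interface a little more concrete: MCP messages are JSON-RPC 2.0, and the specification defines methods such as tools/list, tools/call, and resources/read. The sketch below only builds the request objects. The helper names (rpcRequest, listTools, callTool, readResource), the ticket arguments, and the resource URI are illustrative assumptions, and a real client would normally rely on an MCP SDK rather than hand-built messages.

```javascript
// Build JSON-RPC 2.0 request objects of the kind an MCP client sends.
// Helper names are illustrative and not part of any SDK.
let nextId = 1;

function rpcRequest(method, params) {
  const req = { jsonrpc: "2.0", id: nextId++, method };
  if (params !== undefined) req.params = params;
  return req;
}

// Discover the tools a server exposes.
const listTools = () => rpcRequest("tools/list");

// Invoke one tool by name with structured arguments.
const callTool = (name, args) =>
  rpcRequest("tools/call", { name, arguments: args });

// Read one resource by URI.
const readResource = (uri) => rpcRequest("resources/read", { uri });

const discover = listTools();
const create = callTool("createTicket", { subject: "Late shipment", priority: "medium" });
const read = readResource("docs://pricing/current"); // hypothetical URI

console.log(JSON.stringify(create));
```

Because every server answers the same methods, the client code above does not change when you add a new data source.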

You can think of it as a universal translator that lets your AI model talk to the tools it needs to use.

MCP keeps that communication consistent whether you're using OpenAI, Anthropic, or a local model.

You don't need to write different integration code for each platform.

This standardization makes systems easier to connect, and it also makes AI agents safer and more predictable, because every connection follows the same rules and permissions.

- One protocol instead of many custom adapters.
- Agents can read from multiple systems and stay grounded.
- Registered and permissioned tools make actions explicit.
- Swap models or add tools without rewriting code.
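Here is one way the "swap models without rewriting code" point can play out in practice. The sketch takes a single MCP-style tool definition (name, description, inputSchema) and maps it into the tool formats used by two vendors' tool-calling APIs. The searchOrders definition and the adapter names are assumptions for illustration, and vendor field names can change, so check the current API docs before relying on them.

```javascript
// One MCP-style tool definition (name, description, inputSchema).
const mcpTool = {
  name: "searchOrders",
  description: "Find orders for a customer by email.",
  inputSchema: {
    type: "object",
    properties: { email: { type: "string" } },
    required: ["email"]
  }
};

// Map the same definition into each vendor's tool format.
const adapters = {
  openai: (t) => ({
    type: "function",
    function: { name: t.name, description: t.description, parameters: t.inputSchema }
  }),
  anthropic: (t) => ({
    name: t.name,
    description: t.description,
    input_schema: t.inputSchema
  })
};

function toVendorTools(vendor, tools) {
  const adapt = adapters[vendor];
  if (!adapt) throw new Error(`No adapter for vendor: ${vendor}`);
  return tools.map(adapt);
}

const forOpenAI = toVendorTools("openai", [mcpTool]);
const forAnthropic = toVendorTools("anthropic", [mcpTool]);

console.log(forOpenAI[0].function.name, forAnthropic[0].name);
```

The tool is defined once; only the thin adapter differs per model vendor.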

Traditional agents suffer from context fragmentation. One bot knows your docs, another knows your CRM, and neither can act across both.

With MCP, multiple agents can share the same set of resources and tools, or work with scoped subsets based on role.

Example: A Sales Assistant can read product specs and pricing while a Support Triage agent can search tickets and create follow-ups.

Both can use a shared Customer 360 resource to see account health.

They coordinate through MCP servers rather than bespoke APIs, so new capabilities are introduced once and reused across agents.
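The role-based scoping described above can be sketched as a simple filter over a shared tool catalog. Everything here is hypothetical: the tool names, the scopes field (which is this example's own metadata, not an MCP field), and the helper functions. A production setup would enforce the same check on the server side as well.

```javascript
// Hypothetical tool catalog shared by all agents.
// "scopes" is metadata invented for this sketch, not part of MCP.
const catalog = [
  { name: "getProductSpecs", scopes: ["sales"] },
  { name: "getPricing", scopes: ["sales"] },
  { name: "searchTickets", scopes: ["support"] },
  { name: "createFollowUp", scopes: ["support"] },
  { name: "getCustomer360", scopes: ["sales", "support"] }
];

// Return only the tool names a given role is allowed to see.
function toolsForRole(role, tools = catalog) {
  return tools.filter((t) => t.scopes.includes(role)).map((t) => t.name);
}

// Guard to run before executing any tool call on behalf of an agent.
function assertAllowed(role, toolName) {
  if (!toolsForRole(role).includes(toolName)) {
    throw new Error(`${role} agent may not call ${toolName}`);
  }
}

const salesTools = toolsForRole("sales");
const supportTools = toolsForRole("support");

console.log(salesTools, supportTools);
```

Both agents see getCustomer360, while each keeps its own role-specific tools.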

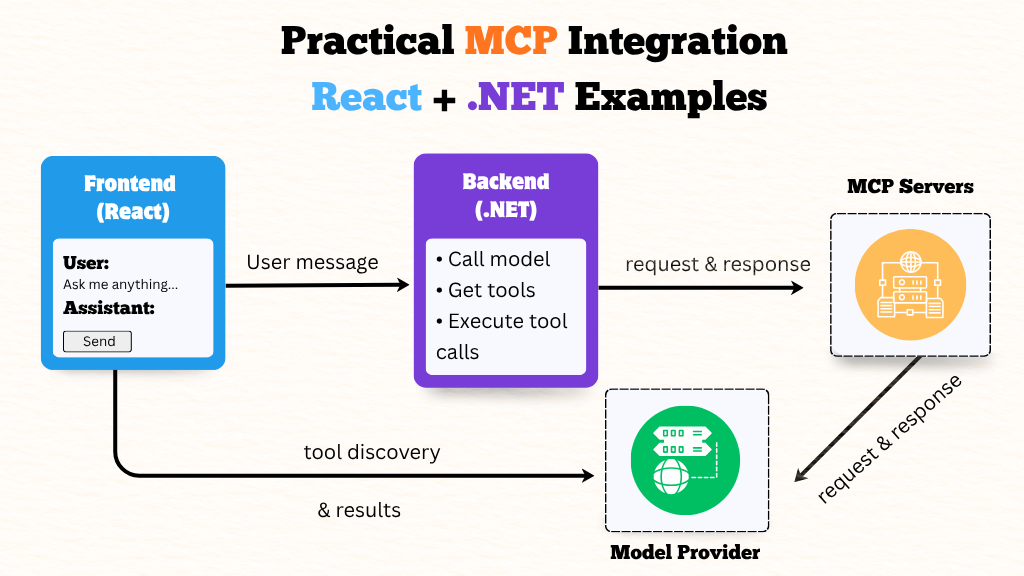

To build a conversational web agent powered by the Model Context Protocol (MCP), you need a few moving parts — each with a clear role in the system.

Here's how everything works together when a user sends a message:

- The agent retrieves context (for example, searchKnowledgeBase) via MCP.
- The agent performs actions (for example, createTicket) through the MCP server.

This structure keeps the communication between model and data sources clean, auditable, and consistent.

Let's look at how you could wire up a minimal conversational agent that uses the Model Context Protocol (MCP).

The React frontend handles the chat interface, and the .NET backend orchestrates communication between the user, the LLM, and MCP servers that expose real tools and data.


```jsx
import React, { useState } from "react";

// Minimal chat widget: keeps the transcript in state and posts it to the backend.
export default function ChatWidget() {
  const [messages, setMessages] = useState([]);
  const [input, setInput] = useState("");

  async function sendMessage() {
    const text = input.trim();
    if (!text) return; // ignore empty submissions

    const userMsg = { role: "user", content: text };
    const history = [...messages, userMsg];
    setMessages(history);
    setInput("");

    try {
      // Send to backend for LLM + MCP orchestration
      const res = await fetch("/api/chat", {
        method: "POST",
        headers: { "Content-Type": "application/json" },
        body: JSON.stringify({ messages: history })
      });
      if (!res.ok) throw new Error(`Chat request failed: ${res.status}`);
      const data = await res.json();
      setMessages(prev => [...prev, data.assistant]);
    } catch (err) {
      // Show the failure in the transcript instead of failing silently
      setMessages(prev => [...prev, { role: "assistant", content: "Sorry, something went wrong." }]);
    }
  }

  return (
    <div style={{ width: 400, padding: 10 }}>
      <div style={{ height: 300, overflow: "auto", border: "1px solid #ddd", marginBottom: 10 }}>
        {messages.map((m, i) => (
          <div key={i} style={{ margin: "5px 0" }}>
            <b>{m.role}:</b> {m.content}
          </div>
        ))}
      </div>
      <input
        value={input}
        onChange={e => setInput(e.target.value)}
        placeholder="Ask me anything..."
        style={{ width: "80%" }}
      />
      <button onClick={sendMessage}>Send</button>
    </div>
  );
}
```


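```csharp
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Http;
using Microsoft.Extensions.Hosting;

// McpClient and LlmClient are placeholder wrappers (not shown) around your
// MCP SDK and model provider. The endpoint is a placeholder too: MCP's standard
// transports are stdio and Streamable HTTP, so use the scheme your server exposes.
const string McpEndpoint = "wss://mcp.your-company.internal";

var app = WebApplication.CreateBuilder(args).Build();

// Chat endpoint: acts as orchestrator between user, LLM, and MCP servers
app.MapPost("/api/chat", async (HttpContext ctx) =>
{
    // ReadFromJsonAsync uses web defaults, so camelCase "messages" binds to Messages
    var payload = await ctx.Request.ReadFromJsonAsync<ChatPayload>();
    if (payload?.Messages is null)
    {
        ctx.Response.StatusCode = StatusCodes.Status400BadRequest;
        return;
    }

    // 1. Discover available tools from your MCP server
    var tools = await McpClient.ListToolsAsync(McpEndpoint);

    // 2. Call the model with user messages + available MCP tools
    var llmResponse = await LlmClient.CallAsync(payload.Messages, tools);

    // 3. If the model wants to use a tool (e.g., "createTicket"), execute it
    if (llmResponse.ToolCall != null)
    {
        var result = await McpClient.ExecuteToolAsync(McpEndpoint, llmResponse.ToolCall);

        // 4. Send the tool result back to the model for final reasoning
        var history = payload.Messages
            .Append(new Msg { Role = "tool", Content = JsonSerializer.Serialize(result) })
            .ToList();
        var final = await LlmClient.CallAsync(history, tools);
        await ctx.Response.WriteAsJsonAsync(new { assistant = final.AssistantMessage });
    }
    else
    {
        await ctx.Response.WriteAsJsonAsync(new { assistant = llmResponse.AssistantMessage });
    }
});

app.Run();

// Message shapes shared with the React widget (serialized as camelCase)
class Msg { public string Role { get; set; } = ""; public string Content { get; set; } = ""; }
class ChatPayload { public List<Msg> Messages { get; set; } = new(); }
```

The React widget sends the user message to /api/chat.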

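The .NET backend:

- discovers the available tools from the MCP server,
- calls the model with the conversation and those tools,
- executes any tool the model requests (such as searchOrders or createTicket), and
- returns the tool result to the model for a final answer.

This keeps your backend clean: no more brittle integrations or custom SDKs per system.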
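The backend flow above handles a single tool call per message. If you want the model to chain several tools, one common approach is a bounded loop, sketched below under some assumptions: callModel and executeTool are hypothetical injected functions, the reply shape ({ toolCall } or { message }) is invented for this example, and the stubs at the bottom stand in for a real model and MCP server.

```javascript
// Run the model/tool loop with a hard cap on tool rounds.
// callModel and executeTool are hypothetical stand-ins injected by the caller.
async function runAgentTurn({ messages, tools, callModel, executeTool, maxRounds = 5 }) {
  const history = [...messages];
  for (let round = 0; round < maxRounds; round++) {
    const reply = await callModel(history, tools);
    if (!reply.toolCall) return { assistant: reply.message, history };

    // Record the tool request and its result so the model sees both.
    history.push({ role: "assistant", content: JSON.stringify(reply.toolCall) });
    const result = await executeTool(reply.toolCall);
    history.push({ role: "tool", content: JSON.stringify(result) });
  }
  throw new Error(`Tool loop exceeded ${maxRounds} rounds`);
}

// Stubbed usage: the fake model asks for one tool, then answers.
const fakeModel = async (history) =>
  history.some((m) => m.role === "tool")
    ? { message: { role: "assistant", content: "Your order ships tomorrow." } }
    : { toolCall: { name: "searchOrders", arguments: { email: "a@example.com" } } };
const fakeTool = async (call) => ({ tool: call.name, status: "delayed" });

const turn = runAgentTurn({
  messages: [{ role: "user", content: "Where is my order?" }],
  tools: [],
  callModel: fakeModel,
  executeTool: fakeTool
});

turn.then((out) => console.log(out.assistant.content));
```

The round cap is a design choice: it stops a confused model from calling tools indefinitely and gives you one place to log or alert when that happens.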

You can plug in new MCP servers (such as CRM, ERP, or analytics data), and the agent discovers their tools through the same listing call, with little or no new integration code in the orchestrator.
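When more than one MCP server is plugged in, the orchestrator needs to know which server owns which tool. A minimal sketch, assuming the tool lists have already been fetched from each server: the server names (crm, erp), the tool names, and the double-underscore prefix convention are all assumptions made for this example.

```javascript
// Merge tool lists from several MCP servers into one routing table.
// Server names and tool lists are hypothetical; a real app would fetch
// each list from its server before calling buildRegistry.
function buildRegistry(serverTools) {
  const registry = new Map();
  for (const [server, tools] of Object.entries(serverTools)) {
    for (const tool of tools) {
      // Prefix with the server name so identically named tools cannot collide.
      registry.set(`${server}__${tool.name}`, { server, tool });
    }
  }
  return registry;
}

const registry = buildRegistry({
  crm: [{ name: "searchAccounts" }, { name: "search" }],
  erp: [{ name: "searchOrders" }, { name: "search" }]
});

// Route a qualified tool name back to the server that owns it.
function route(qualifiedName) {
  const entry = registry.get(qualifiedName);
  if (!entry) throw new Error(`Unknown tool: ${qualifiedName}`);
  return entry.server;
}

console.log([...registry.keys()]);
```

Adding a third server then means adding one more entry to the object passed to buildRegistry; the routing logic stays the same.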

When agents can both read context and take action, customer journeys become smoother and cheaper.

Example: A customer says, “My shipment is late.” → Agent checks orders, confirms delay, offers reshipment, and creates a support ticket — all through MCP tools with full logging.

A self-service tool such as checkOrderStatus commonly reduces tier-1 volume by double-digit percentages.

A user asks, “My shipment is late. Can you help?” The agent calls searchOrders, confirms the order, checks carrier status, and offers a reshipment.

If the user agrees, it calls createTicket with priority medium and posts a summary to the user's portal.

All steps are auditable because MCP tool calls are structured and logged.
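To show what "structured and logged" might look like, here is a sketch of an audit wrapper around tool execution, run against the late-shipment flow. The stub tools, their return values, the list of sensitive field names, and the in-memory auditLog are all assumptions for illustration; a real deployment would write to durable, access-controlled storage and redact nested fields too.

```javascript
const auditLog = [];
const SENSITIVE = new Set(["email", "phone", "cardNumber"]); // assumed field names

// Shallow redaction: replaces sensitive top-level values before logging.
function redact(args) {
  return Object.fromEntries(
    Object.entries(args).map(([k, v]) => [k, SENSITIVE.has(k) ? "[redacted]" : v])
  );
}

// Wrap any tool executor so each call is recorded, success or failure.
function withAudit(executeTool, now = () => new Date().toISOString()) {
  return async function audited(name, args) {
    const entry = { at: now(), tool: name, args: redact(args) };
    try {
      const result = await executeTool(name, args);
      auditLog.push({ ...entry, ok: true });
      return result;
    } catch (err) {
      auditLog.push({ ...entry, ok: false, error: err.message });
      throw err;
    }
  };
}

// Stub executor standing in for real MCP tool calls.
const stubTools = {
  searchOrders: async () => ({ orderId: "A-1001", status: "delayed" }),
  createTicket: async ({ orderId, priority }) => ({ ticketId: "T-1", orderId, priority })
};
const execute = withAudit((name, args) => stubTools[name](args));

// The late-shipment flow: look up the order, then open a medium-priority ticket.
const flow = (async () => {
  const order = await execute("searchOrders", { email: "pat@example.com" });
  return execute("createTicket", { orderId: order.orderId, priority: "medium" });
})();

flow.then(() => console.log(auditLog.map((e) => e.tool)));
```

Wrapping the executor, rather than each tool, means every new tool is audited by default.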

Even with a solid protocol like MCP, it's easy to trip up during implementation.

Most issues aren't technical — they come from design choices or assumptions about how agents should behave.

Here are a few common pitfalls to avoid:

- A tool such as createTicket or fetchOrderStatus makes sense; /getAllUsers probably doesn't. The best MCP integrations focus on meaningful, task-driven tools, not database dumps.

The Model Context Protocol isn't just another integration layer. It turns AI from a chatbox trick into a helpful assistant that knows what it's talking about.
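Returning to the pitfall about task-driven tools: one practical habit is to give each tool a narrow input schema and reject calls that don't fit it. The sketch below uses a hypothetical createTicket definition and a deliberately tiny validator that covers only required fields, primitive string/number/boolean types, and enums. It is not a full JSON Schema implementation, so a real project would likely use an established validator library.

```javascript
// A narrowly scoped, task-driven tool definition (fields are illustrative).
const createTicketTool = {
  name: "createTicket",
  description: "Open a support ticket for one order.",
  inputSchema: {
    type: "object",
    properties: {
      orderId: { type: "string" },
      priority: { type: "string", enum: ["low", "medium", "high"] }
    },
    required: ["orderId", "priority"]
  }
};

// Tiny validator: required keys, primitive types, and enum membership only.
function validateArgs(tool, args) {
  const { properties = {}, required = [] } = tool.inputSchema;
  const errors = [];
  for (const key of required) {
    if (!(key in args)) errors.push(`missing: ${key}`);
  }
  for (const [key, value] of Object.entries(args)) {
    const rule = properties[key];
    if (!rule) {
      errors.push(`unexpected: ${key}`);
      continue;
    }
    if (typeof value !== rule.type) errors.push(`wrong type: ${key}`);
    else if (rule.enum && !rule.enum.includes(value)) errors.push(`not allowed: ${key}`);
  }
  return errors;
}

const ok = validateArgs(createTicketTool, { orderId: "A-1001", priority: "medium" });
const bad = validateArgs(createTicketTool, { priority: "urgent", dumpAll: true });

console.log(ok, bad);
```

A tool that accepts only an order ID and a priority is hard to misuse; a tool that accepts anything is effectively a database dump with extra steps.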

This is where MCP really shines in real-world situations that have a big effect:

The Model Context Protocol gives you a common language for connecting conversational web agents to the systems they need to read and act upon.

Instead of reinventing integrations per bot and per vendor, MCP turns context and actions into reusable building blocks.

For tech leaders, that means faster delivery and cleaner governance.

For AI engineers, it means less glue code and better reliability.

And for customers, it means assistants that actually get things done—politely, safely, and in natural language.

If you're ready to ship an intelligent web assistant or modernize your AI-driven customer support with MCP, start with one workflow, stand up a secure MCP server, and add tools that are worth calling.

When you're ready to move faster, our team can help with architecture, implementation, and quality pipelines.

Explore /services/mcp-integration or /services/ai-chatbots to begin your rollout.
